2024-07-01 15:13:02

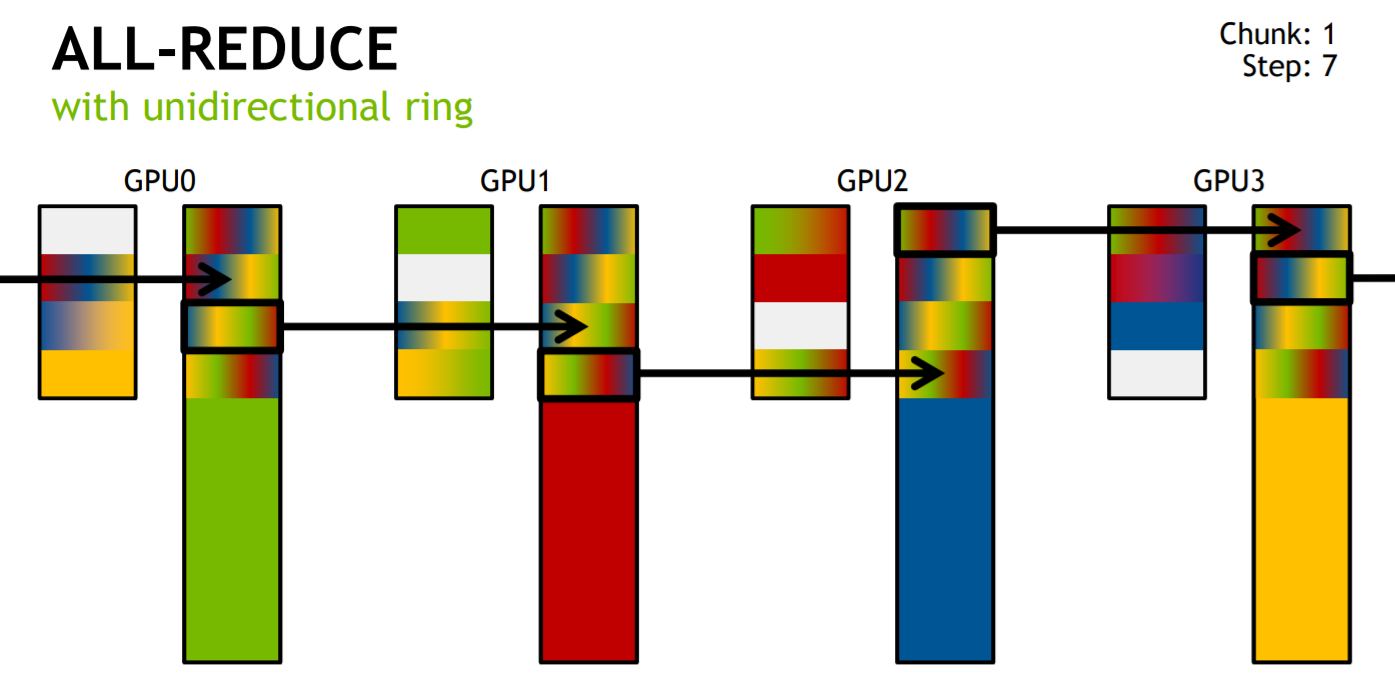

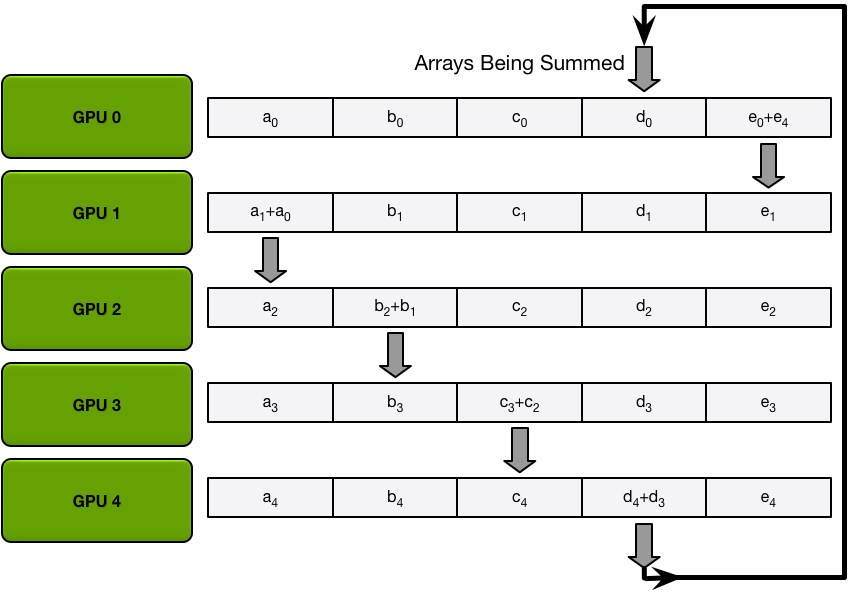

Ring-allreduce, which optimizes for bandwidth and memory usage over latency | Download Scientific Diagram

![RAT - Resilient Allreduce Tree for Distributed Machine Learning | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/2d4a142c3c48adcddd567a37368ca415349f1617/3-Figure2-1.png)

RAT - Resilient Allreduce Tree for Distributed Machine Learning | Semantic Scholar

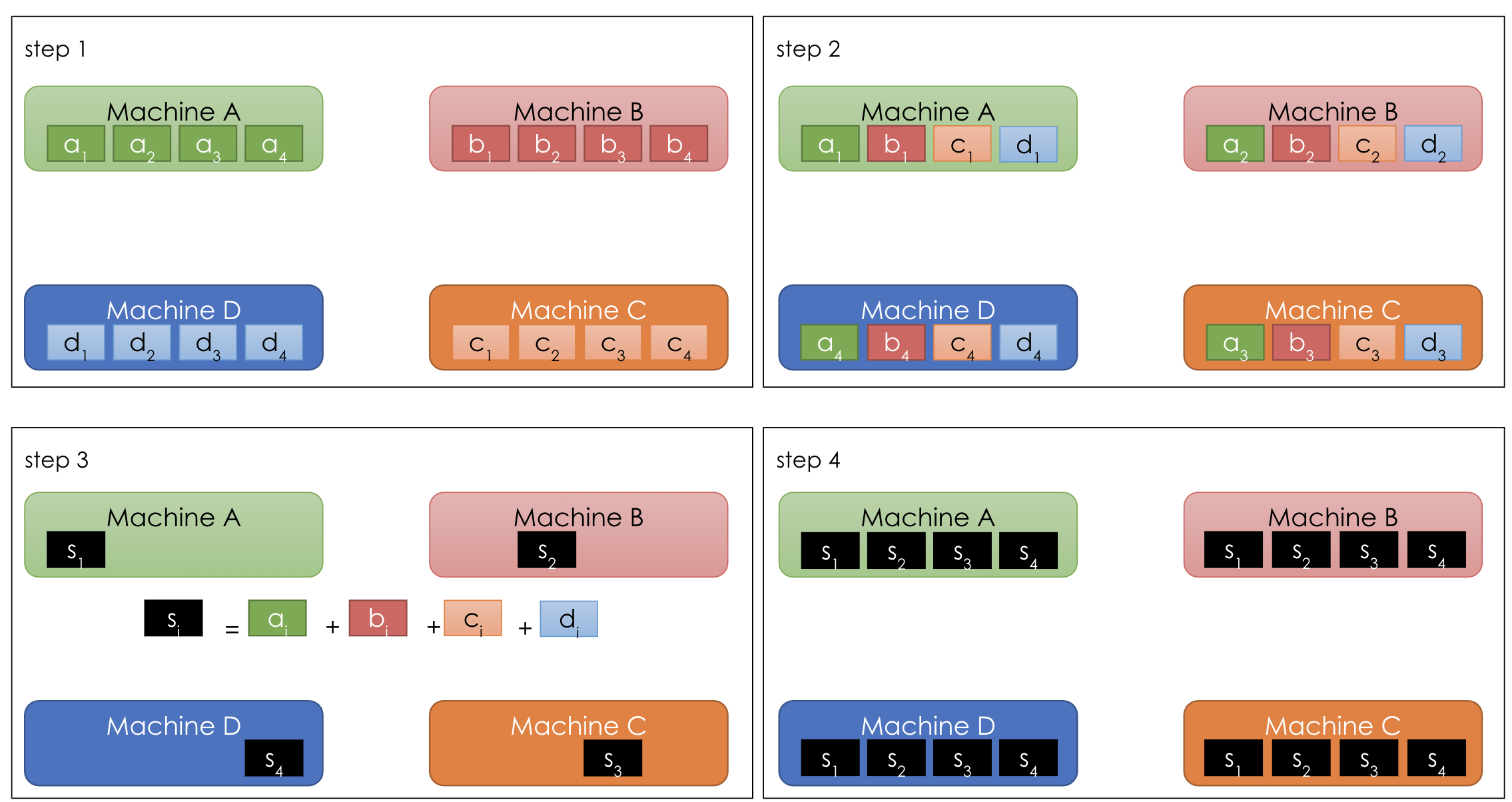

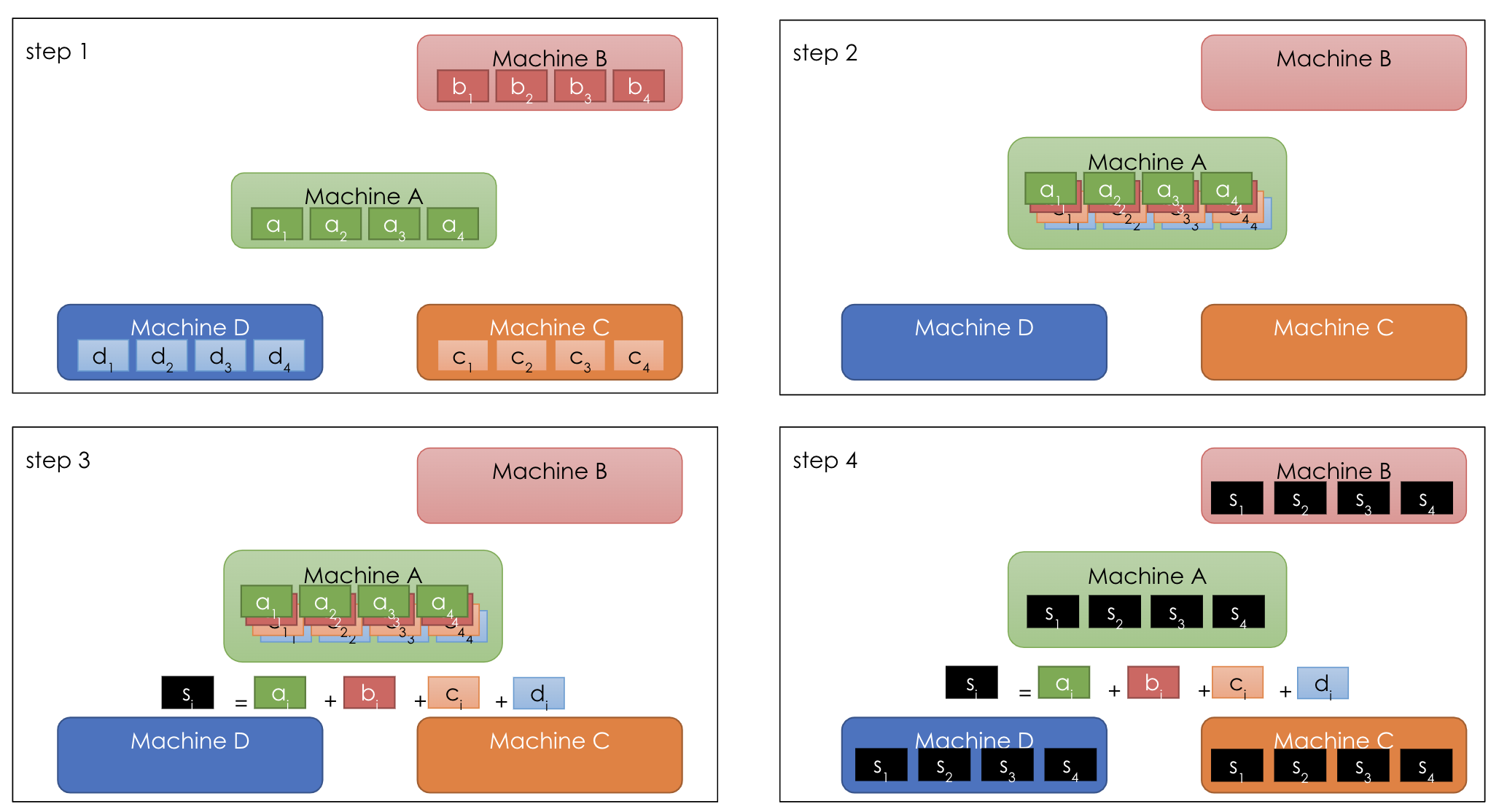

Visual intuition on ring-Allreduce for distributed Deep Learning | by Edir Garcia Lazo | Towards Data Science

Master-Worker Reduce (Left) and Ring AllReduce (Right). | Download Scientific Diagram
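
The master-worker vs. ring comparison, and the caption claiming ring-allreduce favours bandwidth over latency, can be made concrete with a back-of-the-envelope model. This is a minimal sketch under an assumed, simplified cost model that is not taken from the linked figures: it counts the sequential communication rounds (the latency-bound term) and the bytes sent by the most-loaded node (the bandwidth-bound term) for an allreduce of a `size`-byte buffer over `n` workers. The names `CommCost`, `ring_allreduce_cost` and `master_worker_cost` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CommCost:
    steps: int                 # sequential communication rounds (latency term)
    busiest_node_bytes: float  # bytes sent by the most-loaded node (bandwidth term)


def ring_allreduce_cost(n: int, size: float) -> CommCost:
    """Assumed model: reduce-scatter (n-1 rounds) then allgather (n-1 rounds),
    with every node sending one chunk of size/n to its neighbour per round."""
    if n < 2:
        return CommCost(0, 0.0)
    rounds = 2 * (n - 1)
    return CommCost(steps=rounds, busiest_node_bytes=rounds * size / n)


def master_worker_cost(n: int, size: float) -> CommCost:
    """Assumed model: n-1 workers push full buffers to the master (one round),
    then the master sends the reduced buffer back to each worker (one round).
    The master's outgoing link carries (n-1) full copies."""
    if n < 2:
        return CommCost(0, 0.0)
    return CommCost(steps=2, busiest_node_bytes=(n - 1) * size)


if __name__ == "__main__":
    for n in (2, 4, 64):
        ring = ring_allreduce_cost(n, 1.0)
        mw = master_worker_cost(n, 1.0)
        print(f"n={n}: ring {ring.steps} rounds, {ring.busiest_node_bytes:.2f}x buffer sent; "
              f"master-worker {mw.steps} rounds, {mw.busiest_node_bytes:.2f}x buffer sent")
```

Under this model each ring node sends just under twice the buffer size no matter how many workers join, while the master's traffic grows linearly with `n`; the price is 2(n-1) sequential rounds instead of two, which is the bandwidth-over-latency trade-off the captions describe.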

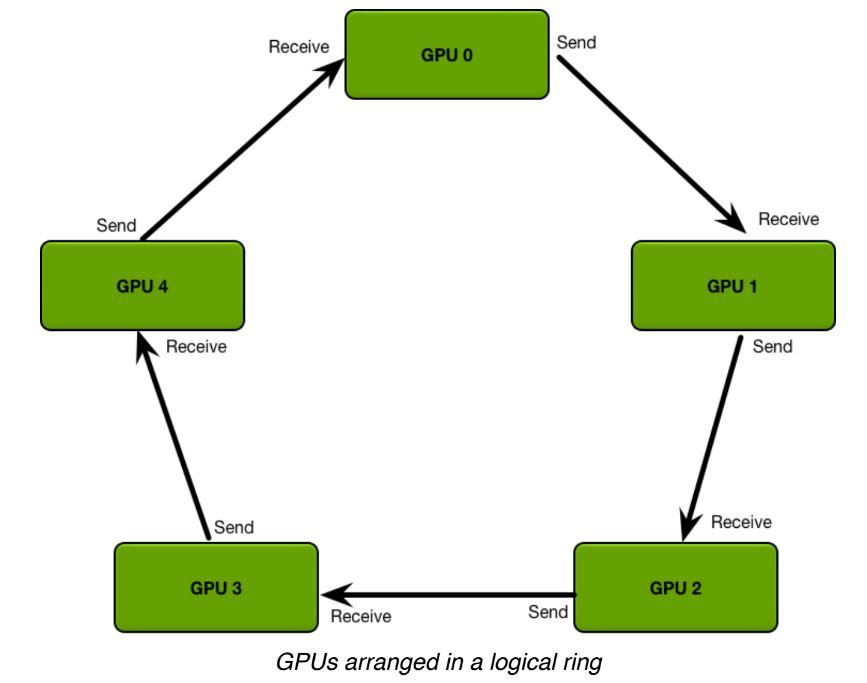

Baidu's 'Ring Allreduce' Library Increases Machine Learning Efficiency Across Many GPU Nodes | Tom's Hardware

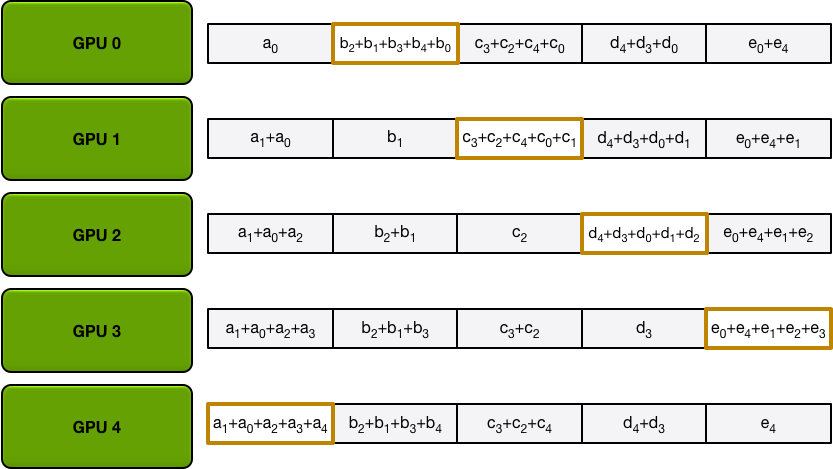

Efficient MPI‐AllReduce for large‐scale deep learning on GPU‐clusters - Thao Nguyen - 2021 - Concurrency and Computation: Practice and Experience - Wiley Online Library

Technologies behind Distributed Deep Learning: AllReduce - Preferred Networks Research & Development

Training in Data Parallel Mode (AllReduce)-Distributed Training-Manual Porting and Training-TensorFlow 1.15 Network Model Porting and Adaptation-Model development-6.0.RC1.alphaX-CANN Community Edition-Ascend Documentation-Ascend Community

GitHub - aliciatang07/Spark-Ring-AllReduce: Ring Allreduce implementation in Spark with Barrier Scheduling experiment

Baidu's 'Ring Allreduce' Library Increases Machine Learning Efficiency Across Many GPU Nodes | Machine learning, Deep learning, Distributed computing

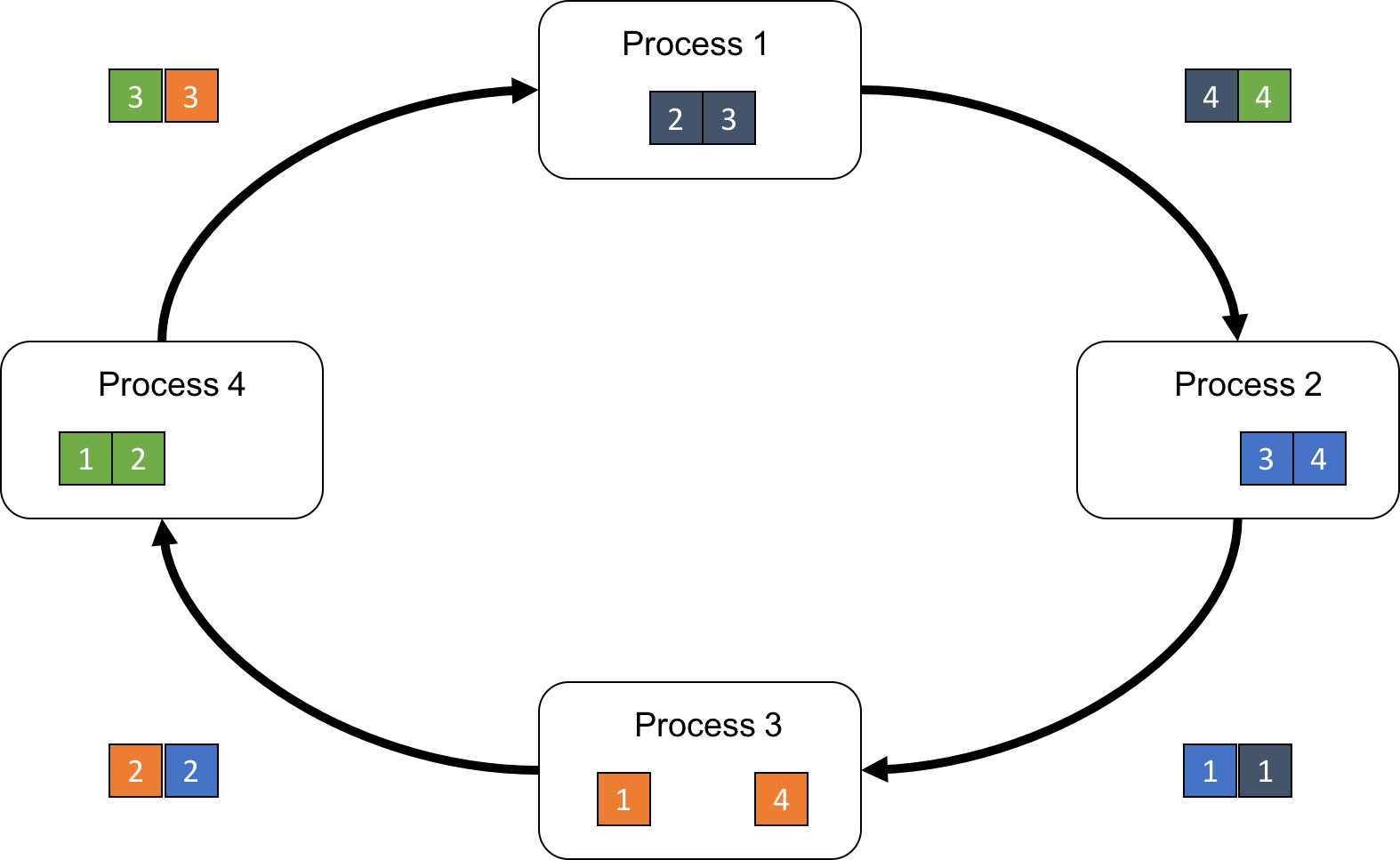

Allgather Data Transfers - Ring Allreduce, HD Png Download, Transparent Png Image - PNGitem

A schematic of the hierarchical Ring-AllReduce on 128 processes with 4... | Download Scientific Diagram

Launching TensorFlow distributed training easily with Horovod or Parameter Servers in Amazon SageMaker | AWS Machine Learning Blog

Baidu Research on Twitter: "Baidu's 'Ring Allreduce' Library Increases #MachineLearning Efficiency Across Many GPU Nodes. https://t.co/DSMNBzTOxD #deeplearning https://t.co/xbSM5klxsk" / Twitter

Massively Scale Your Deep Learning Training with NCCL 2.4 | NVIDIA Technical Blog

BlueConnect: Decomposing All-Reduce for Deep Learning on Heterogeneous Network Hierarchy

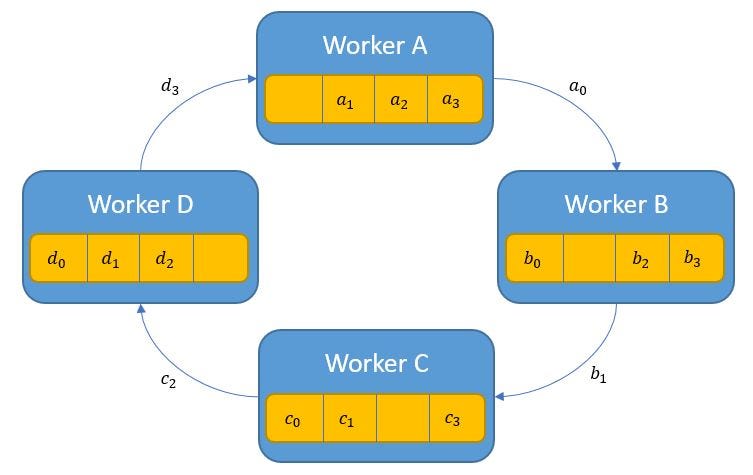

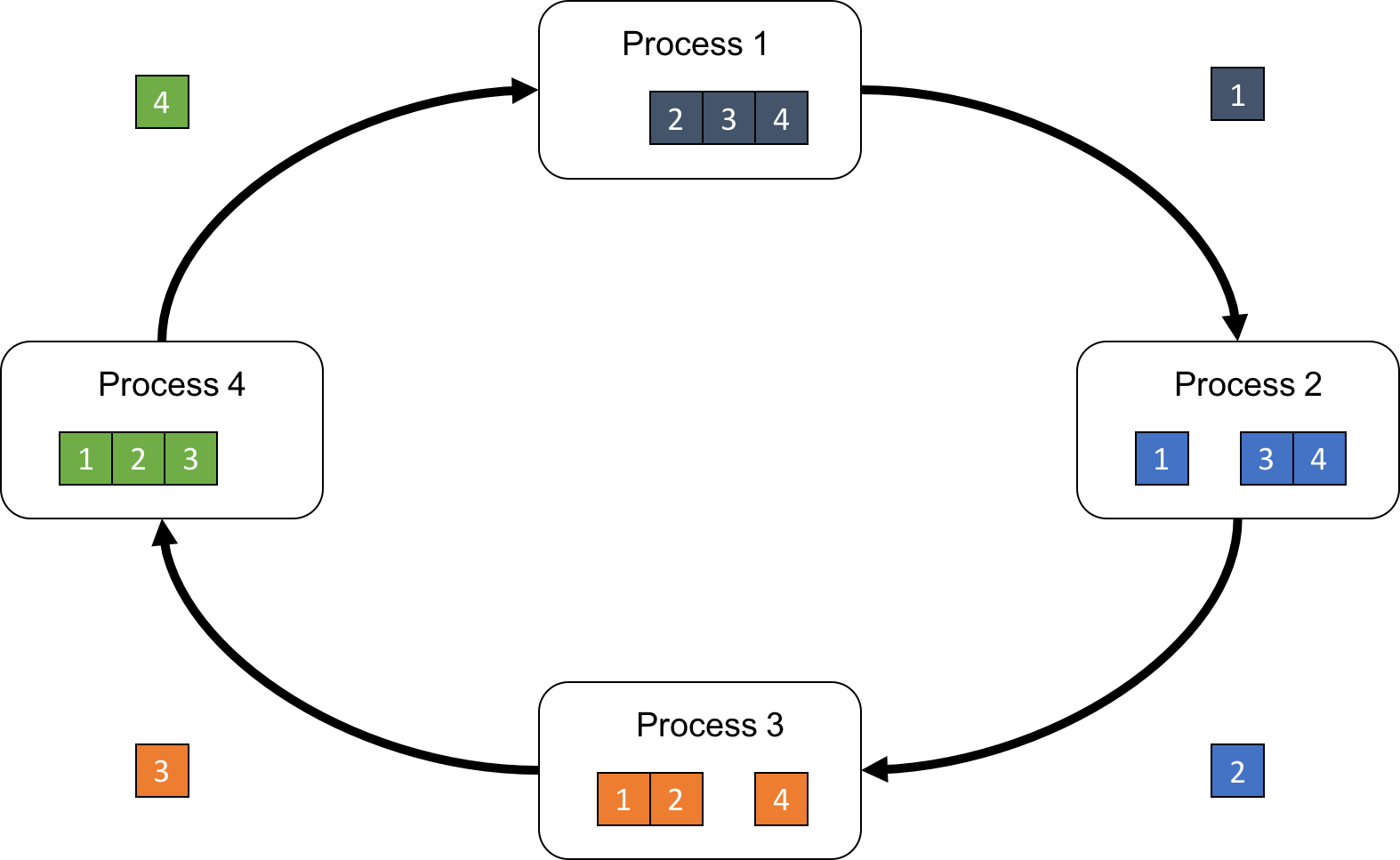

A three-worker illustrative example of the ring-allreduce (RAR) process. | Download Scientific Diagram
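
The three-worker example can be reproduced with a small in-process simulation of the two ring-allreduce phases. This is a minimal sketch, assuming each worker holds an equal-length list of numbers, the reduction is a sum, and "sending" is just copying between Python lists (no MPI, NCCL or real network is involved). The names `ring_allreduce` and `_ring_step` are hypothetical. A reduce-scatter phase of n-1 steps leaves every worker owning one fully summed chunk; an allgather phase of n-1 steps then circulates those chunks around the ring.

```python
from __future__ import annotations

from typing import Callable


def _ring_step(data: list[list[float]], bounds: list[tuple[int, int]],
               chunk_index: Callable[[int], int], accumulate: bool) -> None:
    """One ring step: worker r sends chunk chunk_index(r) to worker (r + 1) % n."""
    n = len(data)
    # Snapshot all outgoing chunks first so every send in a step is simultaneous.
    outgoing = []
    for r in range(n):
        lo, hi = bounds[chunk_index(r)]
        outgoing.append((lo, data[r][lo:hi]))
    for r, (lo, chunk) in enumerate(outgoing):
        dst = (r + 1) % n  # each worker talks only to its right-hand neighbour
        for k, value in enumerate(chunk):
            if accumulate:
                data[dst][lo + k] += value  # reduce-scatter: add into local copy
            else:
                data[dst][lo + k] = value   # allgather: overwrite with final sum


def ring_allreduce(buffers: list[list[float]]) -> list[list[float]]:
    """Simulate a sum ring-allreduce; returns new buffers, inputs are untouched."""
    n = len(buffers)
    length = len(buffers[0])
    # Split indices into n contiguous chunks whose sizes differ by at most one.
    bounds = [(i * length // n, (i + 1) * length // n) for i in range(n)]
    data = [list(b) for b in buffers]  # each worker's local copy

    # Reduce-scatter: in step s, worker r sends chunk (r - s) mod n.
    for s in range(n - 1):
        _ring_step(data, bounds, lambda r: (r - s) % n, accumulate=True)
    # Worker r now holds the complete sum for chunk (r + 1) mod n.
    # Allgather: in step s, worker r forwards chunk (r + 1 - s) mod n.
    for s in range(n - 1):
        _ring_step(data, bounds, lambda r: (r + 1 - s) % n, accumulate=False)
    return data


if __name__ == "__main__":
    workers = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
    print(ring_allreduce(workers))  # → [[111, 222, 333], [111, 222, 333], [111, 222, 333]]
```

With three workers each phase takes two steps, and in every step a worker moves only one chunk of about length/n elements, which is why per-worker traffic stays roughly constant as workers are added.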